Data Quality Must Be Actionable to Be Sustainable

Studies show that data-driven organizations outperform their competitors 23 times over and have an average annual growth rate of 30%. To access this value and derive actionable insights, organizations must develop sophisticated data management strategies and maintain high data quality.

The importance of this trend has not gone unnoticed in the healthcare industry. Current estimates project that the global market for data analytics and data management technologies in healthcare will quadruple in size during this decade, growing from $23.94 billion in 2020 to $101.7 billion by the end of 2030.

As investments continue to flow into new data-focused IT initiatives, healthcare decision-makers need to understand how to use these technologies to generate actionable insights that improve patient care and drive value.

In this guide, you’ll learn about the concept of actionable data insights and how to create data quality systems that support them.

Key Takeaways:

- Data management can transform raw data into a valuable and actionable resource in a wide variety of industries, including healthcare.

- To guide decision-making processes, data must undergo processing to yield consistent, relevant, and comprehensive information.

- Organizations can achieve actionable data quality by implementing data management for infrastructure, formatting, and integration.

What Is Actionable Data?

Daily human activities online, such as e-commerce, communications, and social media interactions, generate vast amounts of stored data. Data scientists now measure the global datasphere in zettabytes. One zettabyte equals a trillion gigabytes, or 10^21 bytes (a 1 followed by 21 zeros). In 2022, the global datasphere reached a volume of 97 zettabytes, more than double the 2019 volume of 41 zettabytes, and it is projected to double again by the end of 2026.

However, most of this data (80-90%) is unstructured, inaccessible, and unusable for the organizations that store it. To turn this raw data into useful information that guides decision-making, organizations must process it so that it becomes accessible and standardized. Processing involves multiple steps that reduce discrepancies, apply formatting, and connect user-facing applications to various back-end systems.

In effective data management systems, the result of these processes is data that provides answers to questions. For example, a system that lets users break down an organization’s KPIs by department, by time interval, or with adjustments for custom variables answers questions that can guide actions such as hiring or resource allocation. The more accessible and reliable an organization’s data is for users with different purposes, the more actionable it becomes.
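As a minimal sketch of what "answering questions" can look like in practice, the snippet below groups one KPI by department and by month. The `visits` records, their field names, and the `average_wait` helper are hypothetical and exist only for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical visit records; the field names are illustrative only.
visits = [
    {"department": "cardiology", "day": date(2023, 1, 9), "wait_minutes": 32},
    {"department": "cardiology", "day": date(2023, 2, 14), "wait_minutes": 28},
    {"department": "oncology", "day": date(2023, 1, 20), "wait_minutes": 45},
    {"department": "oncology", "day": date(2023, 1, 27), "wait_minutes": 55},
]


def average_wait(records, key):
    """Return the average wait time for each group produced by key(record)."""
    totals = defaultdict(lambda: [0, 0])  # group -> [sum of minutes, count]
    for record in records:
        group = key(record)
        totals[group][0] += record["wait_minutes"]
        totals[group][1] += 1
    return {group: total / count for group, (total, count) in totals.items()}


# The same data answers two different questions, depending on the grouping key.
by_department = average_wait(visits, key=lambda r: r["department"])
by_month = average_wait(visits, key=lambda r: (r["day"].year, r["day"].month))

print(by_department)
print(by_month)
```

Because the grouping key is a parameter, the same standardized records can serve users with different purposes without any change to the underlying data.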

How to Create Actionable Data

To transform raw data into actionable insights, organizations must develop a sufficiently integrated IT infrastructure and adopt data management practices that yield high data quality. This process involves four focus areas.

1. Infrastructure

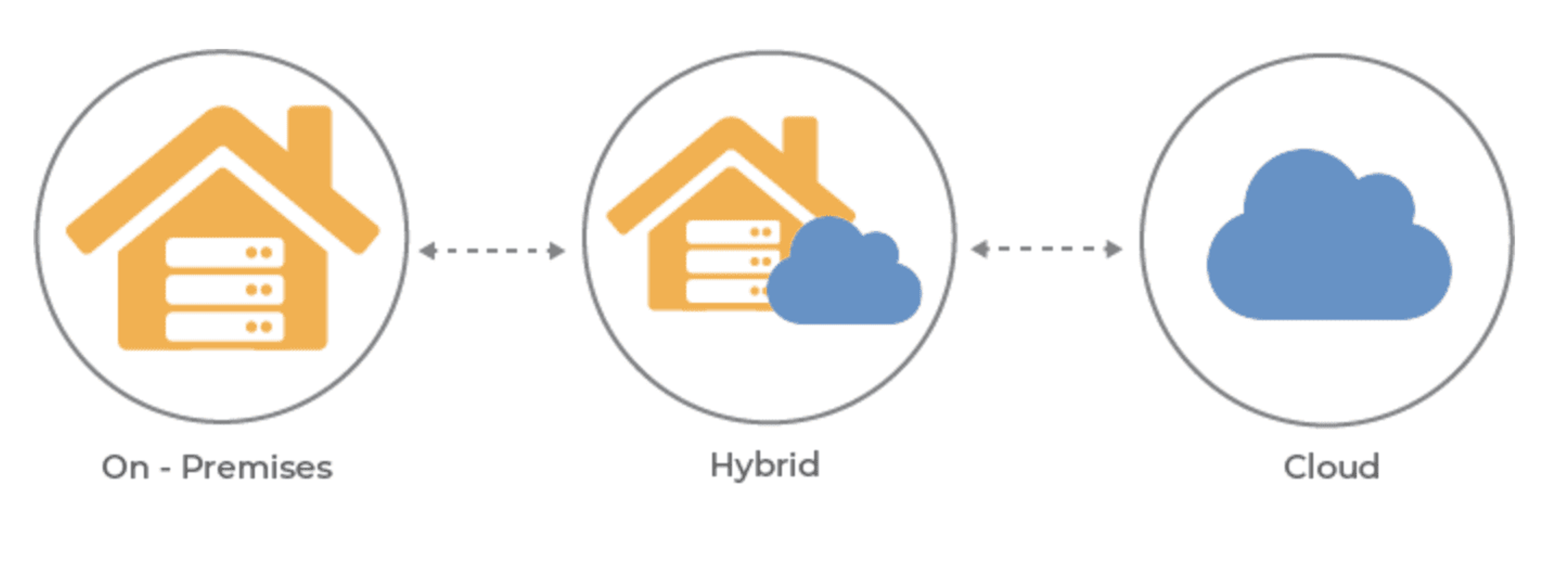

Image Source: Arkatechture

Enabling data analysis begins in the systems that capture and store an organization’s data. In modern organizations, these systems are diverse and include:

- On-premises databases and owned storage hardware

- Data warehouses and lakes

- Cloud services and applications

- Personal electronic devices, including computers, tablets, and smartphones

- Internet of things (IoT) devices

In large organizations, many of these systems are duplicated across departments and physical locations. For example, a commonly accessed database that ships with an application on personal devices may accumulate thousands of separate instances across users’ devices. Conversely, pay-as-you-go systems such as cloud services may run underfunded and fail to capture the necessary data.

At the level of infrastructure, raising raw data to actionable quality starts with an audit of physical and virtual data stores. Once they understand how data is distributed across different technologies, IT teams can begin to implement tools that filter out redundancies and expand overtasked services.
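One way to picture such an audit is to fingerprint every record and list the fingerprints that appear in more than one store. The sketch below assumes each store can be exported as a list of dictionaries; the store names, the sample records, and the `fingerprint` and `find_redundant` helpers are hypothetical.

```python
import hashlib
import json
from collections import defaultdict

# Hypothetical exports from three data stores.
stores = {
    "on_prem_db": [{"id": 1, "name": "Ada Lopez"}, {"id": 2, "name": "Ben Wu"}],
    "cloud_app": [{"id": 1, "name": "Ada Lopez"}],
    "laptop_export": [{"name": "Ada Lopez", "id": 1}, {"id": 3, "name": "Cy Odom"}],
}


def fingerprint(record):
    """Hash a record in canonical form so that field order does not matter."""
    canonical = json.dumps(record, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_redundant(stores):
    """Map each fingerprint that occurs more than once to the stores holding it."""
    locations = defaultdict(list)
    for store_name, records in stores.items():
        for record in records:
            locations[fingerprint(record)].append(store_name)
    return {fp: names for fp, names in locations.items() if len(names) > 1}


redundant = find_redundant(stores)
for names in redundant.values():
    print("Same record found in:", ", ".join(names))
```

Exact-match hashing only catches identical copies. Near-duplicates, such as the same person entered with different spellings, need fuzzier matching than this sketch provides.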

2. Data Quality

In data management, data quality measures how easily users can obtain reliable data that matches their purposes. High data quality is not determined by a single metric, such as true vs false or fast vs slow. For example, a returned value of “Paris is the capital of France” is true but of poor quality if the query took hours to return or if the question was “What are the geographic coordinates of Paris, France?”

Data quality involves multiple characteristics that need to be considered simultaneously. In many organizations, these include:

- Accuracy or correctness

- Accessibility or availability

- Completeness

- Consistency or coherence

- Relevance

- Timeliness or latency
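Several of these characteristics can be scored side by side. The following sketch computes completeness, consistency, and timeliness for a small set of records. The record layout, the five-digit ZIP rule, and the 30-day freshness window are assumptions chosen for illustration, not standard thresholds.

```python
from datetime import datetime, timedelta

# Hypothetical patient records; None marks a missing value.
records = [
    {"patient_id": "p1", "zip": "02139", "updated": datetime(2024, 1, 10)},
    {"patient_id": "p2", "zip": None, "updated": datetime(2024, 1, 9)},
    {"patient_id": "p3", "zip": "2139", "updated": datetime(2023, 6, 1)},
    {"patient_id": "p4", "zip": "10001", "updated": datetime(2024, 1, 2)},
]


def completeness(records, field):
    """Share of records in which the field is present."""
    return sum(r[field] is not None for r in records) / len(records)


def consistency(records, field, is_valid):
    """Share of present values that satisfy the expected format."""
    present = [r[field] for r in records if r[field] is not None]
    if not present:
        return 1.0
    return sum(is_valid(value) for value in present) / len(present)


def timeliness(records, field, now, max_age):
    """Share of records updated within max_age of now."""
    return sum(now - r[field] <= max_age for r in records) / len(records)


now = datetime(2024, 1, 15)  # fixed reference time keeps the example repeatable
report = {
    "completeness": completeness(records, "zip"),
    "consistency": consistency(records, "zip", lambda z: len(z) == 5 and z.isdigit()),
    "timeliness": timeliness(records, "updated", now, timedelta(days=30)),
}
print(report)
```

Reporting the scores together, rather than collapsing them into one number, reflects the point above: a dataset can be complete yet stale, or fresh yet inconsistent.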

3. Data Integration

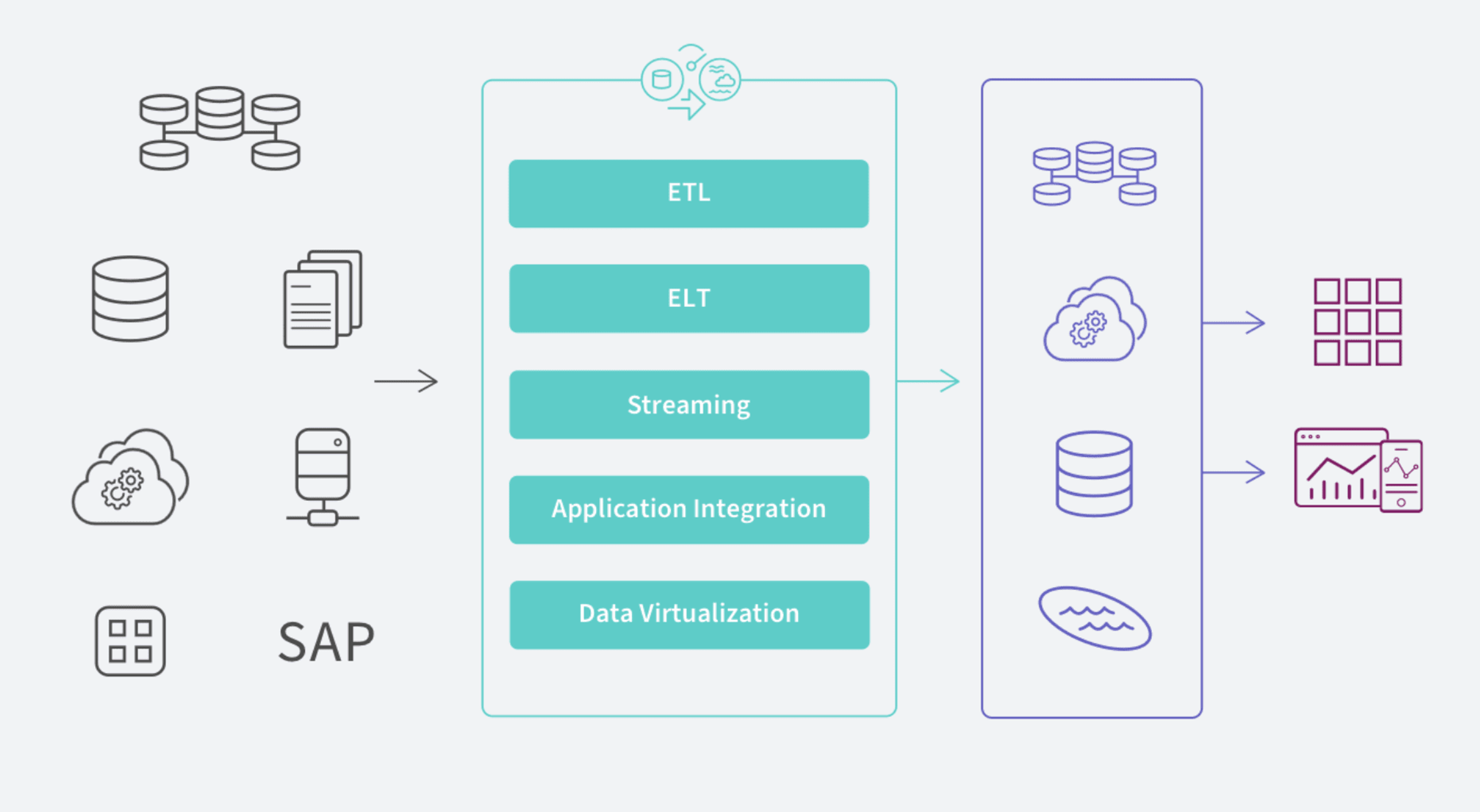

Image Source: Qlik

Data silos are systems in an IT environment that cannot communicate with one another. In heavily siloed environments, users cannot see the full picture from any one point. For example, in commercial organizations, different teams, such as sales and marketing, may each create customer or client accounts that include contact information, activity logs, or purchase histories. However, if these teams rely on siloed front-end applications, users of either system cannot access comprehensive records, such as the total number of active accounts or the complete record for a single account.

Data integration is the process of removing data silos in complex systems. Modern IT environments rely on five common data integration methods:

- Extract, Transform, Load (ETL): Extracts data from different sources into a staging application for transformation and then loads it into a centralized repository, such as a data warehouse.

- Extract, Load, Transform (ELT): Extracts data directly to a centralized repository and performs transformations there.

- Streaming: Instead of integrating data in batches, streaming methods continuously pull individual records or files from contributing systems as they become available.

- Application Integration: Syncs data in separate applications via a shared back-end architecture or application programming interface (API).

- Data Virtualization: Like streaming, virtualization integrates data without batching, but it does so only on demand, in response to specific requests.
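To make the ETL pattern concrete, here is a minimal sketch that revisits the sales and marketing example above. It extracts accounts from two siloed sources, transforms them to a shared key, and loads them into a single in-memory "warehouse". The sample data, field names, and the `extract`, `transform`, and `load` functions are hypothetical, and a real pipeline would read from and write to actual systems.

```python
# Hypothetical exports from two siloed front-end applications.
sales_accounts = [
    {"email": "Ada@Example.com", "purchases": 3},
    {"email": "ben@example.com", "purchases": 1},
]
marketing_accounts = [
    {"Email": "ada@example.com ", "campaign_clicks": 7},
    {"Email": "cy@example.com", "campaign_clicks": 2},
]


def extract():
    """Yield (source, row) pairs from every contributing system."""
    yield from (("sales", row) for row in sales_accounts)
    yield from (("marketing", row) for row in marketing_accounts)


def transform(source, row):
    """Normalize the email into a shared key and drop the source-specific field."""
    email_field = "email" if source == "sales" else "Email"
    key = row[email_field].strip().lower()
    values = {k: v for k, v in row.items() if k != email_field}
    return key, values


def load(warehouse, key, values):
    """Merge the transformed values into the central record for this key."""
    warehouse.setdefault(key, {}).update(values)


warehouse = {}
for source, row in extract():
    key, values = transform(source, row)
    load(warehouse, key, values)

print(len(warehouse), "unique accounts")
print(warehouse["ada@example.com"])
```

In an ELT variant of the same sketch, the raw rows would be loaded into the repository first and the normalization step would run there afterward.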

4. Predictive Modeling

In large volumes, even processed data requires additional tools to serve practical purposes. Predictive modeling refers to the use of algorithms, artificial intelligence systems, and machine learning techniques to predict the behavior of complex systems based on past behavior. With predictive modeling tools, organizations can unlock deeper insights than manual spreadsheet analysis allows.

Healthcare Master Data Management with Coperor by Gaine

Coperor is a scalable master data management platform built to address the challenges of integrating, analyzing, and protecting healthcare data. With capabilities for out-of-the-box data integration across complex organizations and their contracted partners, Coperor delivers unrivaled time-to-value for healthcare data management systems.

To learn more about Coperor, watch this demo and contact Gaine today.